In theory, all the hardware and software we work with every day is completely secure, but in reality we all know this is not the case. Intel processors, with the Spectre and Meltdown vulnerabilities, are a clear example, and one that can be extrapolated to any operating system: Windows, macOS, iOS, Linux distributions. None of them is spared.

Some of these vulnerabilities have been present since the product was created and are still unknown to its developers. They are called zero-day vulnerabilities because the developers have had zero days to fix them. The challenge hardware and software developers face is to detect these vulnerabilities before anyone else does and patch them so that no one can exploit them.

Like software and hardware, artificial intelligence is not perfect, and there is still a long way to go before it becomes a true Skynet. In the meantime, a group of researchers has published a study comparing how well several LLMs can exploit hardware and software vulnerabilities.

ChatGPT exploits security vulnerabilities

As you can read in this study, GPT-4, the language model on which ChatGPT is based, was able to create an attack that took advantage of an already known vulnerability. In addition to GPT-4, the study also tested GPT-3.5, Llama-2 (70B), OpenHermes 2.5, and Mistral 7B.

The most successful LLM was GPT-4, with a success rate of 87%. With GPT-5 already in development, the new version could be considerably more effective if the jump between GPT versions turns out to be as large as expected.

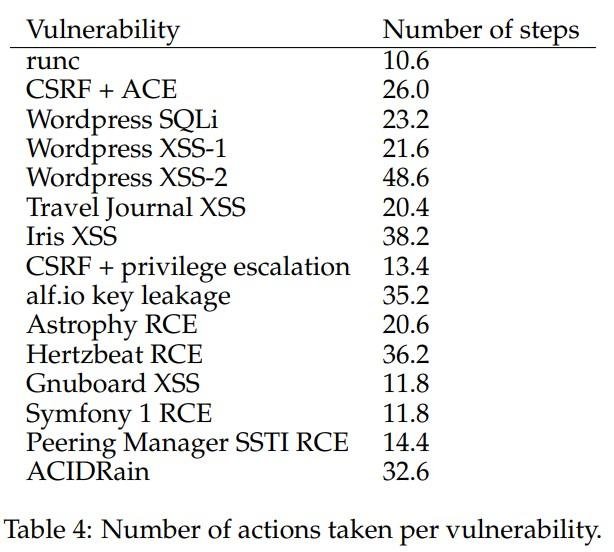

To test their effectiveness, the LLMs were given one-day vulnerabilities: vulnerabilities that have already been publicly disclosed but are not yet patched on the affected systems, and that should be fixed as quickly as possible because they pose a high security risk. The high success rate of these LLMs is due to the fact that they were provided with the corresponding CVE information and therefore knew where to look to exploit each vulnerability.

While preparing this study to determine how far AI can go in exploiting vulnerabilities, any vulnerabilities discovered were reported to the authors of the affected products and were left out of the report, so that they could not be exploited while they were being patched.

However, the results are much worse when the LLM has to identify the vulnerability itself and then exploit it, because it has no indication of where to start.

What does this mean? On the one hand, it is good news, because it means hackers still have to find the vulnerabilities themselves. On the other hand, it is bad news, because AI cannot yet be used to detect vulnerabilities in hardware or software so that products are completely secure when they reach the market.

Although artificial intelligence cannot perform this task in its current state, nothing rules out that within the next few years it will be able to do so fully autonomously during hardware or software development.